Offline Deployment of a K8S Cluster

Cluster Machines

| Hostname | Role | IP Address | Components | Docker Version | K8S Version | OS |
|---|---|---|---|---|---|---|
| master1.k8s.com | master | 192.168.234.51 | kube-apiserver kube-controller-manager kube-scheduler etcd kubelet kube-proxy | 20.10.18 | 1.23.0 | CentOS 7 |
| master2.k8s.com | master | 192.168.234.52 | kube-apiserver kube-controller-manager kube-scheduler etcd kubelet kube-proxy | 20.10.18 | 1.23.0 | CentOS 7 |
| master3.k8s.com | master | 192.168.234.53 | kube-apiserver kube-controller-manager kube-scheduler etcd kubelet kube-proxy | 20.10.18 | 1.23.0 | CentOS 7 |
| node1.k8s.com | worker | 192.168.234.60 | kubelet kube-proxy | 20.10.18 | 1.23.0 | CentOS 7 |

The masters share the Keepalived virtual IP 192.168.234.50 (hostname api-server), which fronts the API servers.

Pre-installation Preparation

Configure Ansible Offline on the Master Node

# Run the following commands on a server with Internet access

# Download the python3 and pip3 installation packages

mkdir ansible -p

cd ansible

yum install wget net-tools lrzsz python3 python3-pip -y --downloadonly --downloaddir=./

rpm -ivh *.rpm

# Fetch requirements.txt

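wget https://raw.githubusercontent.com/kubernetes-sigs/kubespray/release-2.16/requirements.txt # kubespray release-2.16 pins ansible 2.9 (2.9.20 in the output below)

#wget https://raw.githubusercontent.com/kubernetes-sigs/kubespray/master/requirements.txt # the master branch required ansible 2.10 at the time of writing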

# Download the packages listed in requirements.txt

pip3 download -i https://pypi.tuna.tsinghua.edu.cn/simple -r requirements.txt

# The commands above prepare the offline installation packages

# After the download finishes, copy the packages to the target server and install them

rpm -ivh python3-*.rpm libtirpc*.rpm

pip3 install --no-index --find-links=. -r ./requirements.txt

# Verify

ansible --version

ansible 2.9.20

config file = None

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/local/lib/python3.6/site-packages/ansible

executable location = /usr/local/bin/ansible

python version = 3.6.8 (default, Nov 16 2020, 16:55:22) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)]

# Add /etc/hosts entries

echo 192.168.234.50 api-server >> /etc/hosts

echo 192.168.234.51 master1 >> /etc/hosts

echo 192.168.234.52 master2 >> /etc/hosts

echo 192.168.234.53 master3 >> /etc/hosts

echo 192.168.234.60 node1 >> /etc/hosts
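Appending with `echo ... >> /etc/hosts` adds duplicate lines whenever the step is rerun. A minimal idempotent sketch, assuming bash and GNU grep; `add_host_entry` is a hypothetical helper name, not part of any tool:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if that exact line is absent.
# The optional third argument (default /etc/hosts) lets you try it on a scratch file first.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
  if ! grep -qxF "$ip $name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, `add_host_entry 192.168.234.51 master1` can be run any number of times and leaves a single `192.168.234.51 master1` line.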

# Configure passwordless SSH login (run on the Ansible machine)

ssh-keygen -t rsa

for i in master1 master2 master3 node1; do ssh-copy-id -i ~/.ssh/id_rsa.pub "$i"; done

# Configure Ansible

mkdir -p /etc/ansible

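# The [master] group is used by the Nginx and Keepalived steps later (ansible master ...)

cat > /etc/ansible/hosts << EOF
[master]
192.168.234.51
192.168.234.52
192.168.234.53

[k8s]
192.168.234.51
192.168.234.52
192.168.234.53
192.168.234.60
EOF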

# Verify

ansible k8s -m ping

192.168.234.51 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.234.53 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.234.52 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

192.168.234.60 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python"

},

"changed": false,

"ping": "pong"

}

# Copy /etc/hosts to all nodes

ansible k8s -m copy -a "src=/etc/hosts dest=/etc/hosts"

# Verify

ansible k8s -m shell -a "cat /etc/hosts"

192.168.234.51 | CHANGED | rc=0 >>

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.234.50 api-server

192.168.234.51 master1

192.168.234.52 master2

192.168.234.53 master3

192.168.234.60 node1

192.168.234.60 | CHANGED | rc=0 >>

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.234.50 api-server

192.168.234.51 master1

192.168.234.52 master2

192.168.234.53 master3

192.168.234.60 node1

192.168.234.53 | CHANGED | rc=0 >>

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.234.50 api-server

192.168.234.51 master1

192.168.234.52 master2

192.168.234.53 master3

192.168.234.60 node1

192.168.234.52 | CHANGED | rc=0 >>

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.234.50 api-server

192.168.234.51 master1

192.168.234.52 master2

192.168.234.53 master3

192.168.234.60 node1

Basic System Configuration

The following tools must be installed in the order given in this document; otherwise dependency errors will occur!

# Disable the firewall, SELinux, and swap

cat > /root/base.sh << 'EOF'
#!/bin/sh
systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
EOF

# Distribute the script to every node and run it

ansible k8s -m copy -a "src=/root/base.sh dest=/root/base.sh"

ansible k8s -m shell -a "sh /root/base.sh"
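The `sed` lines in base.sh only rewrite a line that reads `SELINUX=enforcing`, and the swap `sed` adds another `#` to already-commented swap lines each time the script is rerun. A sketch of idempotent replacements, assuming GNU sed (`sed -i -E`); both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helpers; each takes an optional file path so it can be tried on a copy first.

# Comment out active swap entries in fstab; lines that already start with '#' are skipped.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Force SELINUX=disabled whatever the current value is. This takes effect after a reboot;
# `setenforce 0` switches the running system to permissive mode in the meantime.
set_selinux_disabled() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Because `^SELINUX=` requires the `=` right after `SELINUX`, the `SELINUXTYPE=` line is left untouched.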

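# Download the installation packages locally (run on a server with Internet access)

# Note: yum --downloadonly skips packages that are already installed on this server, so run it on a minimal system matching the targets, or use yumdownloader --resolve from yum-utils

mkdir /root/k8s && cd /root/k8s

yum install --downloadonly --downloaddir=./ ntpdate wget ntp httpd createrepo vim lrzsz net-tools dos2unix gcc gcc-c++ device-mapper-persistent-data lvm2 ipvsadm ipset pcre pcre-devel zlib zlib-devel openssl openssl-devel libnl3-devel keepalived nc bash-completion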

# Download the Nginx package

wget http://nginx.org/packages/centos/7/x86_64/RPMS/nginx-1.20.1-1.el7.ngx.x86_64.rpm

# Download the Docker packages

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum install --downloadonly --downloaddir=./ docker-ce

# Download the Kubernetes packages

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install --downloadonly --downloaddir=./ kubelet-1.23.0 kubeadm-1.23.0 kubectl-1.23.0

wget --no-check-certificate https://docs.projectcalico.org/manifests/calico.yaml

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

# Pack everything downloaded above, copy it to /opt/repo on master1, and build a local yum repository from it

cd /opt/repo

# Install createrepo

rpm -Uvh libxml2-2.9.1-6.el7_9.6.x86_64.rpm

rpm -ivh deltarpm-3.6-3.el7.x86_64.rpm libxml2-python-2.9.1-6.el7_9.6.x86_64.rpm python-deltarpm-3.6-3.el7.x86_64.rpm

rpm -ivh createrepo-0.9.9-28.el7.noarch.rpm

# Create the repository metadata

createrepo .

# Install httpd

rpm -ivh apr-1.4.8-7.el7.x86_64.rpm

rpm -ivh apr-util-1.5.2-6.el7.x86_64.rpm

rpm -ivh httpd-tools-2.4.6-97.el7.centos.5.x86_64.rpm

rpm -ivh mailcap-2.1.41-2.el7.noarch.rpm

rpm -ivh httpd-2.4.6-97.el7.centos.5.x86_64.rpm

# Start the httpd service and publish the repository under the web root

systemctl start httpd && systemctl enable httpd

ln -s /opt/repo /var/www/html/repo

# The local yum repository is now ready

# Create the repo definition file for the local repository

cat > /root/local.repo << EOF

[local]

name=local

baseurl=http://master1/repo/

enabled=1

gpgcheck=0

EOF

# Back up the existing repo files

ansible k8s -m shell -a "mkdir -p /root/repo_bak && mv /etc/yum.repos.d/* /root/repo_bak"

# Distribute the repo file to all nodes

ansible k8s -m copy -a "src=/root/local.repo dest=/etc/yum.repos.d/local.repo"

# Verify

ansible k8s -m shell -a "yum repolist | grep local"

# Install the required software

ansible k8s -m shell -a "yum install -y ntpdate wget httpd createrepo vim lrzsz net-tools dos2unix gcc gcc-c++ device-mapper-persistent-data lvm2 ipvsadm ipset"

# Configure master1 as the time server

# Install the ntp service

rpm -ivh autogen-libopts-5.18-5.el7.x86_64.rpm

rpm -ivh ntp-4.2.6p5-29.el7.centos.2.x86_64.rpm

systemctl start ntpd && systemctl enable ntpd

# Configure ntp.conf

# Add a log file

echo "logfile /var/log/ntpd.log" >> /etc/ntp.conf

# Allow hosts in the given subnet to query and synchronize time from this server

echo "restrict 192.168.234.0 mask 255.255.255.0 nomodify notrap" >> /etc/ntp.conf

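# Comment out the four default pool servers (lines 21-24 of the stock config), use a public time server in China instead, and fall back to the local clock when external time is unavailable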

sed -i s/'server 0.centos.pool.ntp.org iburst'/'#server 0.centos.pool.ntp.org iburst'/g /etc/ntp.conf

sed -i s/'server 1.centos.pool.ntp.org iburst'/'#server 1.centos.pool.ntp.org iburst'/g /etc/ntp.conf

sed -i s/'server 2.centos.pool.ntp.org iburst'/'#server 2.centos.pool.ntp.org iburst'/g /etc/ntp.conf

sed -i s/'server 3.centos.pool.ntp.org iburst'/'#server 3.centos.pool.ntp.org iburst'/g /etc/ntp.conf
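The four `sed` commands above can be collapsed into one anchored substitution that is also safe to rerun, since already-commented lines no longer start with `server`. A minimal sketch assuming GNU sed; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out the stock "server N.centos.pool.ntp.org iburst"
# lines (N = 0..3) in one pass. Lines already starting with '#' do not match ^server.
comment_default_ntp_servers() {
  local conf="${1:-/etc/ntp.conf}"
  sed -i -E 's/^(server [0-3]\.centos\.pool\.ntp\.org iburst)/#\1/' "$conf"
}
```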

cat >> /etc/ntp.conf <<EOF

server ntp1.aliyun.com

server 127.127.1.0 #local clock

fudge 127.127.1.0 stratum 10

EOF

# Restart the service and synchronize the time

systemctl restart ntpd

ntpdate -u ntp1.aliyun.com

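# Add a time-sync cron job on the other nodes (master1 runs ntpd itself, so the pattern excludes it)

ansible 'k8s:!192.168.234.51' -m cron -a 'name="ntpdate" minute=*/1 job="/usr/sbin/ntpdate master1"'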

# Verify

ansible k8s -m shell -a "crontab -l"

192.168.234.53 | CHANGED | rc=0 >>

#Ansible: ntpdate

*/1 * * * * ntpdate master1

192.168.234.52 | CHANGED | rc=0 >>

#Ansible: ntpdate

*/1 * * * * ntpdate master1

192.168.234.60 | CHANGED | rc=0 >>

#Ansible: ntpdate

*/1 * * * * ntpdate master1

# Upgrade the kernel

## Offline upgrade

# Prepare the offline upgrade packages (download them on a machine with Internet access)

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.214-1.el7.elrepo.x86_64.rpm

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.214-1.el7.elrepo.x86_64.rpm

# Copy them to the target server and install

rpm -ivh *

grub2-set-default 0

init 6

# Distribute and install the upgrade packages on all nodes in one batch

ansible k8s -m copy -a "src=/root/kernel/ dest=/root/kernel/"

ansible k8s -m shell -a "cd /root/kernel && rpm -ivh *"

ansible k8s -m shell -a "grub2-set-default 0"

# Reboot each node manually

# Verify

ansible k8s -m shell -a "uname -r"

uname -r

5.4.214-1.el7.elrepo.x86_64

## Online upgrade

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

yum --enablerepo=elrepo-kernel install kernel-ml kernel-ml-devel -y

grub2-set-default 0

init 6

# Set kernel parameters

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# Distribute the kernel parameter file

ansible k8s -m copy -a "src=/etc/sysctl.d/k8s.conf dest=/etc/sysctl.d/k8s.conf"

# Load br_netfilter and apply the parameters

ansible k8s -m shell -a "modprobe br_netfilter"

ansible k8s -m shell -a "sysctl -p /etc/sysctl.d/k8s.conf"

192.168.234.52 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

192.168.234.51 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

192.168.234.53 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

192.168.234.60 | CHANGED | rc=0 >>

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1
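`modprobe br_netfilter` lasts only until the next reboot, and the `net.bridge.*` keys in k8s.conf do not exist until the module is loaded. One way to persist it is systemd's modules-load.d mechanism, which loads the modules listed in `/etc/modules-load.d/*.conf` at boot. A minimal sketch; the helper name and the file name `k8s.conf` are assumptions:

```bash
#!/usr/bin/env bash
# Hypothetical helper: write one kernel module name per line to a modules-load.d file,
# which systemd-modules-load reads at boot. Pass a scratch path to try it out.
persist_modules() {
  local dest="$1"
  shift
  printf '%s\n' "$@" > "$dest"
}
```

For example, run `persist_modules /etc/modules-load.d/k8s.conf br_netfilter` on master1 and distribute the file with `ansible k8s -m copy` like the other files.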

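# Configure ulimit. The ulimit command only affects the short-lived shell Ansible opens; the limits.conf entries persist the limit for new login sessions (systemd services such as docker and kubelet take their limits from their unit files instead)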

ansible k8s -m shell -a "ulimit -SHn 65535"

ansible k8s -m shell -a 'echo "* soft nofile 65535" >> /etc/security/limits.conf'

ansible k8s -m shell -a 'echo "* hard nofile 65535" >> /etc/security/limits.conf'

# Configure IPVS

ansible k8s -m shell -a "yum -y install ipvsadm ipset"

# Create the IPVS module-loading script

cat > /etc/sysconfig/modules/ipvs.modules << EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# Distribute the script

ansible k8s -m copy -a "src=/etc/sysconfig/modules/ipvs.modules dest=/etc/sysconfig/modules/ipvs.modules"

# Run the script and verify the result

ansible k8s -m shell -a "chmod 755 /etc/sysconfig/modules/ipvs.modules"

ansible k8s -m shell -a "bash /etc/sysconfig/modules/ipvs.modules"

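# On kernel 4.19 and later (including the 5.4 kernel installed above), nf_conntrack_ipv4 has been merged into nf_conntrack

ansible k8s -m shell -a "lsmod | grep -e ip_vs -e nf_conntrack"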
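Scanning `lsmod | grep` output by eye is easy to get wrong. A sketch that reads `lsmod` output on stdin and prints any module from ipvs.modules that is missing, assuming the standard `lsmod` layout (one header line, module name in the first column); the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and print every module loaded by
# ipvs.modules that is absent. Empty output means all of them are loaded.
missing_ipvs_modules() {
  local loaded m
  loaded=$(awk 'NR > 1 { print $1 }')   # first column, skipping the "Module Size Used by" header
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    grep -qxF "$m" <<<"$loaded" || echo "$m"
  done
}
```

On a node, `lsmod | missing_ipvs_modules` prints nothing when all five modules are loaded.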

# Install the Kubernetes packages on all nodes

ansible k8s -m shell -a "yum install -y kubelet-1.23.0 kubeadm-1.23.0 kubectl-1.23.0"

Nginx + Keepalived Load Balancing (deployed on the master nodes)

# Distribute the package to the master nodes

ansible master -m copy -a "src=/root/nginx-1.20.1-1.el7.ngx.x86_64.rpm dest=/root/nginx-1.20.1-1.el7.ngx.x86_64.rpm"

# Install nginx

ansible master -m shell -a "rpm -ivh /root/nginx-1.20.1-1.el7.ngx.x86_64.rpm"

# Verify the installation

ansible master -m shell -a "nginx -V"

# Nginx configuration: paste the following into /root/nginx.conf

user nobody;

worker_processes 4;

worker_rlimit_nofile 40000;

error_log /var/log/nginx/error.log;

events {

worker_connections 1024;

}

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

least_conn;

server 192.168.234.51:6443 max_fails=3 fail_timeout=5s;

server 192.168.234.52:6443 max_fails=3 fail_timeout=5s;

server 192.168.234.53:6443 max_fails=3 fail_timeout=5s;

}

server {

listen 16443;

proxy_pass k8s-apiserver;

}

}

# Distribute the configuration file

ansible master -m copy -a "src=/root/nginx.conf dest=/etc/nginx/nginx.conf"

# Start nginx and verify

ansible master -m shell -a "systemctl restart nginx && systemctl enable nginx && netstat -tnlp | grep 16443"

192.168.234.51 | CHANGED | rc=0 >>

tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 3016/nginx: master

192.168.234.52 | CHANGED | rc=0 >>

tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 2523/nginx: master

192.168.234.53 | CHANGED | rc=0 >>

tcp 0 0 0.0.0.0:16443 0.0.0.0:* LISTEN 2478/nginx: master

# Install keepalived on all master nodes

ansible master -m shell -a "yum install keepalived -y"

# Enable keepalived at boot (start it only after the configuration below is in place; the stock keepalived.conf defines example virtual IPs)

ansible master -m shell -a "systemctl enable keepalived"

# Configure keepalived

## Primary (run on master1)

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

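interface ens33 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; unique per VRRP instance (same value on all nodes of VI_1)

priority 100 # priority; the backups use 90 and 80

advert_int 1 # VRRP advertisement interval in seconds (default 1)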

authentication {

auth_type PASS

auth_pass 1111

}

# virtual IP

virtual_ipaddress {

192.168.234.50/24

}

track_script {

check_nginx

}

}

EOF

## Backup 1 (run on master2)

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id NGINX_BACKUP_1

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

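state BACKUP

interface ens33 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; unique per VRRP instance (same value on all nodes of VI_1)

priority 90 # priority; lower than the primary (100)

advert_int 1 # VRRP advertisement interval in seconds (default 1)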

authentication {

auth_type PASS

auth_pass 1111

}

# virtual IP

virtual_ipaddress {

192.168.234.50/24

}

track_script {

check_nginx

}

}

EOF

## Backup 2 (run on master3)

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id NGINX_BACKUP_2

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

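state BACKUP

interface ens33 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; unique per VRRP instance (same value on all nodes of VI_1)

priority 80 # priority; lower than backup 1 (90)

advert_int 1 # VRRP advertisement interval in seconds (default 1)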

authentication {

auth_type PASS

auth_pass 1111

}

# virtual IP

virtual_ipaddress {

192.168.234.50/24

}

track_script {

check_nginx

}

}

EOF
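The three keepalived.conf files above differ only in `router_id`, `state`, and `priority`. A sketch that renders all three from one template, using the interface `ens33` and VIP `192.168.234.50/24` from above as defaults; `render_keepalived_conf` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a keepalived.conf for one node.
# Args: router_id state priority [interface] [vip]
render_keepalived_conf() {
  local router_id="$1" state="$2" priority="$3"
  local iface="${4:-ens33}" vip="${5:-192.168.234.50/24}"
  cat <<EOF
global_defs {
    router_id ${router_id}
}
vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_nginx
    }
}
EOF
}
```

For example, on master2: `render_keepalived_conf NGINX_BACKUP_1 BACKUP 90 > /etc/keepalived/keepalived.conf`.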

# Create the check_nginx.sh script

# Copy the following content into /etc/keepalived/check_nginx.sh

#!/bin/bash

count=$(ss -antp |grep 16443 |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl restart nginx || systemctl stop keepalived

else

exit 0

fi
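The check above counts every `ss -antp` line containing `16443`, which includes established connections as well as the listener. A stricter sketch that only looks at listening sockets, assuming the `ss -lnt` column layout (local `address:port` in column 4); the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if `ss -lnt` output on stdin shows a listener on port $1.
port_is_listening() {
  local port="$1"
  # Column 4 is "addr:port" (e.g. 0.0.0.0:16443 or [::]:16443); compare the part after the last ':'
  awk -v p="$port" 'NR > 1 { n = split($4, a, ":"); if (a[n] == p) found = 1 }
                    END { exit !found }'
}
```

In check_nginx.sh the count could then become `if ! ss -lnt | port_is_listening 16443; then systemctl restart nginx || systemctl stop keepalived; fi`.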

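# Make the script executable and distribute it to the master nodes (without mode, the copy module creates new files with the default umask, dropping the execute bit)

chmod +x /etc/keepalived/check_nginx.sh

ansible master -m copy -a "src=/etc/keepalived/check_nginx.sh dest=/etc/keepalived/check_nginx.sh mode=0755"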

# Restart keepalived

ansible master -m shell -a "systemctl restart keepalived"

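# Verify

# master1 should now hold two addresses: its own IP and the VIP 192.168.234.50 (check with ip addr show ens33)

# Now stop keepalived on master1 manually

systemctl stop keepalived

# The VIP should now float to master2

Install Docker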

# Install Docker on all nodes

ansible k8s -m shell -a "yum install docker-ce -y"

# Start Docker and verify

ansible k8s -m shell -a "systemctl start docker && systemctl enable docker"

ansible k8s -m shell -a "docker info | grep Version"

192.168.234.53 | CHANGED | rc=0 >>

Server Version: 20.10.18

Cgroup Version: 1

Kernel Version: 5.4.214-1.el7.elrepo.x86_64

192.168.234.52 | CHANGED | rc=0 >>

Server Version: 20.10.18

Cgroup Version: 1

Kernel Version: 5.4.214-1.el7.elrepo.x86_64

192.168.234.60 | CHANGED | rc=0 >>

Server Version: 20.10.18

Cgroup Version: 1

Kernel Version: 5.4.214-1.el7.elrepo.x86_64

192.168.234.51 | CHANGED | rc=0 >>

Server Version: 20.10.18

Cgroup Version: 1

Kernel Version: 5.4.214-1.el7.elrepo.x86_64

# Configure Docker

# vim /etc/docker/daemon.json

cat > /etc/docker/daemon.json << EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"data-root": "<change to the data disk mount directory>"

}

EOF
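dockerd refuses to start when daemon.json is not valid JSON (a trailing comma is enough), and the `data-root` placeholder above must be replaced first. A minimal sketch of a check to run before distributing the file, assuming the python3 installed earlier for Ansible; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: exit non-zero (with python's error on stderr) if the file is not valid JSON.
validate_json() {
  python3 -m json.tool "$1" > /dev/null
}
```

For example, `validate_json /etc/docker/daemon.json && ansible k8s -m copy -a "src=/etc/docker/daemon.json dest=/etc/docker/daemon.json"`. After the restart, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.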

# Distribute the configuration file

ansible k8s -m copy -a "src=/etc/docker/daemon.json dest=/etc/docker/daemon.json"

ansible k8s -m shell -a "systemctl restart docker"

Deploy with kubeadm

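Image pull script: pull the images on a machine with Internet access (kubeadm 1.23.0 must be installed there, since the script calls it), then import them on the cluster nodes.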

#!/bin/bash

# Pull the images

# List the images to pull; images.txt is a temporary file

kubeadm --kubernetes-version=v1.23.0 --image-repository=registry.aliyuncs.com/google_containers config images list > images.txt

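# Switch to the registry.aliyuncs.com/google_containers registry, which is reachable from China and lags k8s.gcr.io by only a day or two (unnecessary here, because --image-repository above already points at it)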

# sed -i 's@k8s.gcr.io@registry.aliyuncs.com/google_containers@g' images.txt

# Pull each image

while read -r line; do
  docker pull "$line"
done < images.txt

# Export only the images listed in images.txt to a local tarball (filtering `docker images` would also pick up unrelated images on this machine)

docker save -o /root/k8s.tar $(cat images.txt)

# Distribute the images to every node

ansible k8s -m copy -a "src=/root/k8s.tar dest=/root/k8s.tar"

# Load the images

ansible k8s -m shell -a "docker load -i /root/k8s.tar"
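`docker load` succeeding on a node does not by itself show that every image kubeadm needs is present. A sketch that compares images.txt (copied from the machine that ran the pull script, which is an assumption) with the node's local images; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: $1 is a file with one image reference per line (e.g. images.txt);
# stdin is "repository:tag" lines for the local images. Prints each wanted image not found.
missing_images() {
  local wanted="$1" have img
  have=$(cat)
  while IFS= read -r img; do
    [ -z "$img" ] && continue
    grep -qxF "$img" <<<"$have" || echo "$img"
  done < "$wanted"
}
```

On a node, `docker images --format '{{.Repository}}:{{.Tag}}' | missing_images images.txt` prints nothing when every listed image has been loaded.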

# (Not needed when --image-repository is used) Retag the images for the k8s.gcr.io registry and remove the registry.aliyuncs.com/google_containers tags

#sed -i 's@registry.aliyuncs.com/google_containers/@@g' images.txt

#cat images.txt | while read line

#do

# docker tag registry.aliyuncs.com/google_containers/$line k8s.gcr.io/$line

# docker rmi -f registry.aliyuncs.com/google_containers/$line

#done

# List the local Docker images afterwards

ansible k8s -m shell -a "docker images"

Install K8S

kubeadm Initialization

The kubeadm init Workflow

The kubeadm init command bootstraps a Kubernetes master by performing the following steps:

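- Preflight checks: kubeadm exits when it hits an ERROR unless the problem is fixed or --ignore-preflight-errors=<list of errors> is given explicitly. WARNINGs may also be printed.

- Generate a self-signed CA to set up an identity for each system component: the certificate directory can be set explicitly with --cert-dir (default /etc/kubernetes/pki), where the CA certificate, keys, and related files are placed. The API Server certificate gets additional SAN entries for any --apiserver-cert-extra-sans values, lowercased if necessary.

- Write kubeconfig files to /etc/kubernetes/ so that the kubelet, Controller Manager, and Scheduler, each with its own identity, can connect to the API Server; a separate kubeconfig file named admin.conf is also generated for administrative operations.

- Generate static Pod manifests for the API Server, Controller Manager, and Scheduler in /etc/kubernetes/manifests; the kubelet watches this directory and creates the system component Pods from it at startup. If no external etcd is provided, an additional static Pod manifest is generated for etcd.

The kubeadm init workflow continues only after the master's static Pods are all running and healthy.

- Apply labels and taints to the master so that production workloads are not scheduled onto it.

- Generate a token that other nodes can later use to register with the master; a token string can also be supplied explicitly with --token. So that nodes can join the cluster through the mechanisms described in the Bootstrap Tokens and TLS bootstrapping documents, kubeadm performs all the necessary configuration:

  - Create a ConfigMap holding the information needed to add a node to the cluster, and set up the related RBAC access rules for it.

  - Allow bootstrap tokens to access the CSR signing API.

  - Configure automatic approval of new CSRs.

- Install a DNS server (CoreDNS) and kube-proxy through the API Server.

  - Note that although the DNS server is deployed at this point, it is not scheduled until a CNI plugin is installed.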

Run the Initialization

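Note 1: Because we are deploying a highly available cluster, the API Server HA endpoint must be specified with --control-plane-endpoint. When no port is given, kubeadm uses the API server's own port 6443 (as the join commands in the log below show), so clients reach the API server on whichever master currently holds the VIP directly instead of going through the Nginx load balancer on 16443; to route through Nginx, specify "192.168.234.50:16443".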

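Note 2: kubeadm pulls the required images from k8s.gcr.io by default, so --image-repository is used to point it at Alibaba Cloud's mirror. An offline deployment uses the local images instead, which must be imported beforehand with tags matching --image-repository.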

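Note 3: Without --upload-certs, you must manually copy the CA certificates from the first control-plane node to every additional control-plane node that joins. With --upload-certs (recommended), kubeadm uploads the certificates to the kubeadm-certs Secret, and joining control-plane nodes fetch them using --certificate-key.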

# kubeadm initialization

kubeadm init \

--control-plane-endpoint "192.168.234.50" \

--kubernetes-version "1.23.0" \

--pod-network-cidr "10.0.0.0/8" \

--service-cidr "172.16.0.0/16" \

--image-repository registry.aliyuncs.com/google_containers \

--upload-certs
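The init flags above can also be kept in a kubeadm configuration file (kubeadm 1.23 accepts the `kubeadm.k8s.io/v1beta3` API), which is easier to review and reuse. A sketch that renders one; it additionally sets kube-proxy's `mode: ipvs` at init time, and it assumes the endpoint should go through the Nginx listener (`192.168.234.50:16443`) rather than straight to port 6443 as in the command above. The function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a kubeadm config equivalent to the init flags above,
# plus a KubeProxyConfiguration that starts kube-proxy in IPVS mode.
# ASSUMPTION: the endpoint defaults to the Nginx listener 192.168.234.50:16443.
render_kubeadm_config() {
  local endpoint="${1:-192.168.234.50:16443}"
  cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.23.0
controlPlaneEndpoint: "${endpoint}"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.0.0.0/8
  serviceSubnet: 172.16.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

Usage: `render_kubeadm_config > kubeadm-config.yaml && kubeadm init --config kubeadm-config.yaml --upload-certs`. With this endpoint, the join commands kubeadm prints use port 16443.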

# Configure kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7ff77c879f-fh9vb 0/1 Pending 0 23m

coredns-7ff77c879f-qmk7z 0/1 Pending 0 23m

etcd-k8s-master-1 1/1 Running 0 24m

kube-apiserver-k8s-master-1 1/1 Running 0 24m

kube-controller-manager-k8s-master-1 1/1 Running 0 24m

kube-proxy-7hx55 1/1 Running 0 23m

kube-scheduler-k8s-master-1 1/1 Running 0 24m

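# Enable kubelet at boot (ideally before kubeadm init; otherwise preflight prints the Service-Kubelet warning seen in the log below)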

ansible k8s -m shell -a "systemctl enable kubelet"

# Test whether the API Server load balancer (VIP 192.168.234.50, Nginx port 16443) is reachable

nc -v 192.168.234.50 16443

Connection to 192.168.234.50 port 16443 [tcp/sun-sr-https] succeeded!

# kubeadm initialization log

[init] Using Kubernetes version: v1.23.0

[preflight] Running pre-flight checks

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master1.k8s.com] and IPs [172.16.0.1 192.168.234.51 192.168.234.50]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master1.k8s.com] and IPs [192.168.234.51 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master1.k8s.com] and IPs [192.168.234.51 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 21.003937 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.23" in namespace kube-system with the configuration for the kubelets in the cluster

NOTE: The "kubelet-config-1.23" naming of the kubelet ConfigMap is deprecated. Once the UnversionedKubeletConfigMap feature gate graduates to Beta the default name will become just "kubelet-config". Kubeadm upgrade will handle this transition transparently.

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

f85a83097e59b3a3db18cdaea8e6fee26f08ec08d8b4194d800351573f4987b8

[mark-control-plane] Marking the node master1.k8s.com as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master1.k8s.com as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 0r0krs.sm74rmj923is338p

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.234.50:6443 --token 0r0krs.sm74rmj923is338p \

--discovery-token-ca-cert-hash sha256:6c7e4cf98fe230dbec2a3ed9f04c16bd9357594a79efd947aef670c4b18198fc \

--control-plane --certificate-key f85a83097e59b3a3db18cdaea8e6fee26f08ec08d8b4194d800351573f4987b8

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.234.50:6443 --token 0r0krs.sm74rmj923is338p \

--discovery-token-ca-cert-hash sha256:6c7e4cf98fe230dbec2a3ed9f04c16bd9357594a79efd947aef670c4b18198fc

Add Nodes

Add Master Nodes

# Join the other master nodes (run on master2 and master3)

kubeadm join 192.168.234.50:6443 --token 0r0krs.sm74rmj923is338p \

--discovery-token-ca-cert-hash sha256:6c7e4cf98fe230dbec2a3ed9f04c16bd9357594a79efd947aef670c4b18198fc \

--control-plane --certificate-key f85a83097e59b3a3db18cdaea8e6fee26f08ec08d8b4194d800351573f4987b8

# Verify after joining

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.k8s.com NotReady control-plane,master 13m v1.23.0

master2.k8s.com NotReady control-plane,master 3m51s v1.23.0

master3.k8s.com NotReady control-plane,master 2m1s v1.23.0

# Verify the Pods

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d8c4cb4d-gnz6l 0/1 Pending 0 12m

kube-system coredns-6d8c4cb4d-zcjtp 0/1 Pending 0 12m

kube-system etcd-master1.k8s.com 1/1 Running 0 13m

kube-system etcd-master2.k8s.com 1/1 Running 0 3m3s

kube-system etcd-master3.k8s.com 1/1 Running 0 72s

kube-system kube-apiserver-master1.k8s.com 1/1 Running 0 13m

kube-system kube-apiserver-master2.k8s.com 1/1 Running 0 3m3s

kube-system kube-apiserver-master3.k8s.com 1/1 Running 0 72s

kube-system kube-controller-manager-master1.k8s.com 1/1 Running 1 (2m53s ago) 13m

kube-system kube-controller-manager-master2.k8s.com 1/1 Running 0 3m3s

kube-system kube-controller-manager-master3.k8s.com 1/1 Running 0 73s

kube-system kube-proxy-6bbtx 1/1 Running 0 74s

kube-system kube-proxy-72khg 1/1 Running 0 3m4s

kube-system kube-proxy-d46wf 1/1 Running 0 12m

kube-system kube-scheduler-master1.k8s.com 1/1 Running 1 (2m53s ago) 13m

kube-system kube-scheduler-master2.k8s.com 1/1 Running 0 3m3s

kube-system kube-scheduler-master3.k8s.com 1/1 Running 0 72s

Add Worker Nodes

# Join the worker node (run on node1)

kubeadm join 192.168.234.50:6443 --token 0r0krs.sm74rmj923is338p \

--discovery-token-ca-cert-hash sha256:6c7e4cf98fe230dbec2a3ed9f04c16bd9357594a79efd947aef670c4b18198fc

# Verify the nodes (run on a master)

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.k8s.com NotReady control-plane,master 54m v1.23.0

master2.k8s.com NotReady control-plane,master 43m v1.23.0

master3.k8s.com NotReady control-plane,master 42m v1.23.0

node1.k8s.com NotReady <none> 8m14s v1.23.0

Handling Expired Tokens

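# Generate a new token (bootstrap tokens expire after 24 hours by default); kubeadm token create --print-join-command prints a complete worker join command instead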

kubeadm token create

kubeadm token list
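The openssl pipeline below can be wrapped in a function that fails loudly when the certificate cannot be read, instead of silently hashing empty input. A sketch, assuming bash and the openssl CLI; `ca_cert_hash` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "sha256:<hex>" for the public key of a CA certificate,
# the form --discovery-token-ca-cert-hash expects.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}" hex
  # pipefail makes a failure in any stage (e.g. unreadable cert) fail the whole pipeline
  hex=$(set -o pipefail
        openssl x509 -pubkey -noout -in "$crt" \
          | openssl rsa -pubin -outform der 2>/dev/null \
          | openssl dgst -sha256 -hex \
          | sed 's/^.* //') || return 1
  echo "sha256:${hex}"
}
```

On master1, `ca_cert_hash` should print the same `sha256:...` value that kubeadm init showed in its join commands.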

# Get the sha256 hash of the CA certificate's public key (the value for --discovery-token-ca-cert-hash)

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Deploy Add-ons

Deploy the CNI Network Plugin

Notes:

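- The Pod network must not overlap with any host network, which is why --pod-network-cidr was passed explicitly to kubeadm init.

- Make sure the CNI plugin supports RBAC (role-based access control).

- If you need IPv6 or dual-stack (IPv4/IPv6), make sure the CNI plugin supports it.

- In OpenStack environments, disable the "port security" feature; otherwise the Calico IPIP tunnel cannot be established.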

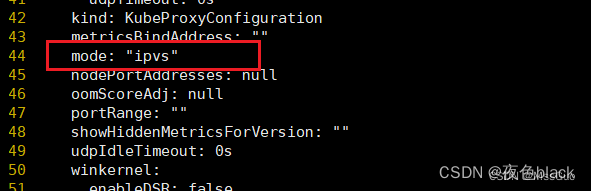

# Before deploying the network plugin, switch kube-proxy to IPVS mode

kubectl edit configmaps kube-proxy -n kube-system

# Change mode to "ipvs"

# Restart kube-proxy after the change

kubectl rollout restart daemonset kube-proxy -n kube-system
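`kubectl edit` is interactive. A non-interactive sketch that rewrites the kube-proxy ConfigMap in a pipe, assuming the kubeadm-generated ConfigMap contains the line `mode: ""` (the empty default, which selects iptables on Linux); the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical filter: change an empty kube-proxy mode (mode: "") to "ipvs" in YAML read
# from stdin, keeping the original indentation. All other lines pass through unchanged.
set_kube_proxy_mode_ipvs() {
  sed -E 's/^([[:space:]]*mode:)[[:space:]]*""[[:space:]]*$/\1 "ipvs"/'
}
```

Usage: `kubectl get configmap kube-proxy -n kube-system -o yaml | set_kube_proxy_mode_ipvs | kubectl replace -f -`, followed by the rollout restart above.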

# kubectl get nodes shows every node as NotReady because no CNI plugin has been deployed yet

# Use the Calico network plugin

## Download the Calico YAML file (offline, use the copy downloaded during package preparation)

wget https://docs.projectcalico.org/manifests/calico.yaml
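In an offline cluster the nodes cannot pull the images that calico.yaml (and the Dashboard's recommended.yaml) reference, so they must be pulled, saved, and loaded like the kubeadm images. A sketch that lists them, assuming every image appears on an `image:` line as in typical manifests; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the unique container images referenced by "image:" keys
# (including "- image:" list items) in a Kubernetes manifest, quotes removed.
manifest_images() {
  grep -E '^[[:space:]]*(-[[:space:]]*)?image:' "$1" \
    | sed -E 's/^[[:space:]]*(-[[:space:]]*)?image:[[:space:]]*//' \
    | awk '{ print $1 }' \
    | tr -d "\"'" \
    | sort -u
}
```

For example, on the machine with Internet access, pull each image printed by `manifest_images calico.yaml`, then export them with `docker save -o /root/calico.tar $(manifest_images calico.yaml)`.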

# Deploy

$ kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

# Verify

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-66966888c4-txjk7 1/1 Running 0 3m

kube-system calico-node-ff7b4 1/1 Running 0 3m1s

kube-system calico-node-lqp8l 1/1 Running 0 3m1s

kube-system calico-node-vsvrf 1/1 Running 0 3m1s

kube-system calico-node-wrnnz 1/1 Running 0 3m1s

kube-system coredns-6d8c4cb4d-gnz6l 1/1 Running 0 130m

kube-system coredns-6d8c4cb4d-zcjtp 1/1 Running 0 130m

kube-system etcd-master1.k8s.com 1/1 Running 1 (13m ago) 131m

kube-system etcd-master2.k8s.com 1/1 Running 1 120m

kube-system etcd-master3.k8s.com 1/1 Running 2 (60s ago) 119m

kube-system kube-apiserver-master1.k8s.com 1/1 Running 1 (14m ago) 131m

kube-system kube-apiserver-master2.k8s.com 1/1 Running 1 (12m ago) 120m

kube-system kube-apiserver-master3.k8s.com 1/1 Running 3 (61s ago) 119m

kube-system kube-controller-manager-master1.k8s.com 1/1 Running 4 (101s ago) 131m

kube-system kube-controller-manager-master2.k8s.com 1/1 Running 4 (58s ago) 120m

kube-system kube-controller-manager-master3.k8s.com 1/1 Running 1 (2m6s ago) 119m

kube-system kube-proxy-6bbtx 1/1 Running 0 119m

kube-system kube-proxy-72khg 1/1 Running 0 120m

kube-system kube-proxy-7mlpc 1/1 Running 0 85m

kube-system kube-proxy-d46wf 1/1 Running 0 130m

kube-system kube-scheduler-master1.k8s.com 1/1 Running 5 (66s ago) 131m

kube-system kube-scheduler-master2.k8s.com 1/1 Running 3 (2m34s ago) 120m

kube-system kube-scheduler-master3.k8s.com 1/1 Running 1 (15m ago) 119m
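The pod check can also be scripted instead of done by eye. This is a minimal sketch. `unhealthy_pods` is a hypothetical helper, not part of kubectl. It assumes the default column layout of `kubectl get pods -A --no-headers` and prints every pod whose status is not Running or Completed, and every Running pod whose READY count is incomplete.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pods -A --no-headers` output on stdin
# and prints pods that are not healthy.
# Fields assumed: $1=NAMESPACE $2=NAME $3=READY (x/y) $4=STATUS
# (RESTARTS such as "1 (13m ago)" spans later fields and is ignored.)
unhealthy_pods() {
  awk '{
    split($3, ready, "/")
    # Report any status other than Running/Completed, and Running pods
    # whose ready containers are fewer than the total.
    if (($4 != "Running" && $4 != "Completed") ||
        ($4 == "Running" && ready[1] != ready[2]))
      print $1 "/" $2 " " $3 " " $4
  }'
}

# Usage on the cluster (empty output means every pod looks healthy):
#   kubectl get pods -A --no-headers | unhealthy_pods
```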

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.k8s.com Ready control-plane,master 131m v1.23.0

master2.k8s.com Ready control-plane,master 121m v1.23.0

master3.k8s.com Ready control-plane,master 119m v1.23.0

node1.k8s.com Ready <none> 85m v1.23.0
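A similar sketch works for nodes. It uses two hypothetical helpers, `not_ready_nodes` and `all_nodes_ready`, and assumes that STATUS is the second field of `kubectl get nodes --no-headers`. The exit status of `all_nodes_ready` can gate later steps in a script.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for `kubectl get nodes --no-headers` output on stdin.
# Field $2 is STATUS; anything other than exactly "Ready" is reported,
# including "NotReady" and cordoned nodes ("Ready,SchedulingDisabled").
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 " " $2 }'
}

# Succeeds (exit 0) only when no node is reported.
all_nodes_ready() {
  [ -z "$(not_ready_nodes)" ]
}

# Usage:
#   kubectl get nodes --no-headers | not_ready_nodes
#   kubectl get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"
```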

# At this point all nodes should be in the Ready state

Deploy Dashboard

# Deploy Dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml
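The cluster is offline, so the images that recommended.yaml references must be fetched on a machine with Internet access and then imported on the nodes. The helper below is a sketch. `list_images` is a hypothetical name, and it assumes each image appears on its own `image:` line. The `docker save`/`docker load` usage and the archive name are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the unique images referenced by a manifest file.
# Handles lines like "image: repo/name:tag", "- image: ...", quoted values
# and trailing "# comments"; images spread across several lines are not handled.
list_images() {
  grep -E '^[[:space:]-]*image:' "$1" \
    | sed -E -e 's/^[[:space:]-]*image:[[:space:]]*//' \
             -e 's/[[:space:]]*#.*$//' \
             -e "s/[\"']//g" \
    | sort -u
}

# Usage (archive name is illustrative):
#   On the machine with Internet access:
#     for img in $(list_images recommended.yaml); do docker pull "$img"; done
#     docker save -o dashboard-images.tar $(list_images recommended.yaml)
#   On every node of the offline cluster:
#     docker load -i dashboard-images.tar
```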

# Change the Service type to NodePort

$ vi recommended.yaml

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort        # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000   # added
  selector:
    k8s-app: kubernetes-dashboard
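The nodePort chosen here must fall inside the API Server's NodePort range. Here is a small sketch. It assumes the kube-apiserver default range of 30000-32767 (`--service-node-port-range`), and `valid_nodeport` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 is an integer inside [min, max].
# Defaults are the kube-apiserver default --service-node-port-range (30000-32767);
# pass other bounds if the cluster was started with a different range.
valid_nodeport() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  [[ $port =~ ^[0-9]+$ ]] && (( port >= min && port <= max ))
}

# Usage:
#   valid_nodeport 30000 && echo "30000 is usable as a nodePort"
```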

# Deploy

$ kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

Warning: spec.template.metadata.annotations[seccomp.security.alpha.kubernetes.io/pod]: deprecated since v1.19, non-functional in v1.25+; use the "seccompProfile" field instead

deployment.apps/dashboard-metrics-scraper created

# Verify

$ kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-79459f84f-c5fgz 1/1 Running 0 51s

kubernetes-dashboard-76dc96b85f-btbsz 1/1 Running 0 52s

$ kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 172.16.176.90 <none> 8000/TCP 79s

kubernetes-dashboard NodePort 172.16.25.76 <none> 443:30000/TCP 81s

# Open https://<any node IP>:30000 in a browser

# Create a Dashboard admin ServiceAccount

$ cat dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

$ kubectl create -f dashboard-admin.yaml

serviceaccount/dashboard-admin created

# Grant permissions: bind dashboard-admin to the cluster-admin ClusterRole

$ cat dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard

$ kubectl create -f dashboard-admin-bind-cluster-role.yaml

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin-bind-cluster-role created

# Get the token

$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

Name: dashboard-admin-token-756kz

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin
             kubernetes.io/service-account.uid: 76ecffae-7c97-45c6-96e6-5d5a967300dd

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IlRKeGIxcVpXY1FGVDBtMy1Oc0hMSl9OdFM5U3N1OFVfY3ZURlhNZjNyRmcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNzU2a3oiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNzZlY2ZmYWUtN2M5Ny00NWM2LTk2ZTYtNWQ1YTk2NzMwMGRkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmRhc2hib2FyZC1hZG1pbiJ9.n0hetvvxwx4vh6i3MYVNnzCus5pF5XwYc1-q99z-dgDuHKRJ8F0QtymliAF-LBP26sRB2SBh0AkCjl7nSIm08zoK3Bi9PCjEJbD5e1ppOaGEaoYAGW3UApBb44qiIxaoV8DoDTacmjO_cF2SIo24A5z9Q3WdC2pHXlXTqM77p-JjKqOV4_hsZrSMucXpHf0d1nXfYW4sTr5hPLRW2QBodAljMvHsSJ7WXy4UnaAk8S8yiQxRfxfJ4B3_AsFJF6T-TaIZoxlVmNKzzH0Y-P-IqexKqHYkQp6IIARyqFfhcOR1LBmYnKcxhPJziTMlqqRePkFhD2xQBg7RpyfK-dPdiQ
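The token is a JWT, so you can decode its payload locally to confirm which ServiceAccount it belongs to. This is a sketch. `jwt_payload` is a hypothetical helper, it assumes GNU `base64 -d`, and it does not verify the signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (2nd segment) of a JWT.
# It does NOT verify the signature; it only makes the claims readable.
jwt_payload() {
  local seg
  # Take the payload segment and map the base64url alphabet back to base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url omits "=" padding; restore it to a multiple of 4 characters.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}

# Usage: put the token from the "describe secret" output into TOKEN, then
#   jwt_payload "$TOKEN"
# The "sub" claim should read system:serviceaccount:kubernetes-dashboard:dashboard-admin.
```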

# Log in to the Dashboard with this token

Enable the API Aggregator

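API Aggregation lets you extend the Kubernetes API without modifying Kubernetes core code. A third-party service is registered with the Kubernetes API and can then be accessed through it. The Metrics Server API is one example.

Note: the other way to extend the Kubernetes API is to use CRDs (Custom Resource Definitions).

Check whether Aggregator Routing is enabled on the API Server, that is, whether the API Server was started with the --enable-aggregator-routing=true option.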

# Check whether Aggregator Routing is enabled

$ ps -ef | grep apiserver

root 86688 86663 9 11:30 ? 00:02:13 kube-apiserver --advertise-address=192.168.234.51 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --etcd-servers=https://127.0.0.1:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=172.16.0.0/16 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
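The same check can be scripted. This sketch assumes the apiserver command line arrives on stdin, and `aggregator_routing_enabled` is a hypothetical helper. It also accepts a bare `--enable-aggregator-routing`, because a boolean flag given without a value is treated as true.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a kube-apiserver command line on stdin and succeed
# if aggregator routing is enabled ("--enable-aggregator-routing=true", or the
# bare flag, which a boolean flag treats as true).
aggregator_routing_enabled() {
  tr -s ' ' '\n' | grep -qxE -- '--enable-aggregator-routing(=true)?'
}

# Usage on a master node ("[k]" keeps grep from matching its own process):
#   ps -ef | grep '[k]ube-apiserver' | aggregator_routing_enabled \
#     && echo enabled || echo "not enabled"
```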

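# --enable-aggregator-routing does not appear in the ps output above, so it is not enabled yet. On every master node, edit the API Server manifest kube-apiserver.yaml to enable Aggregator Routing. Once the manifest changes, the API Server restarts automatically and the setting takes effect.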
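With several masters to change, the edit can also be done non-interactively instead of in vim. This sketch uses a hypothetical `add_aggregator_flag` helper and assumes the kubeadm-generated layout, where the flags are listed under a `- kube-apiserver` line. It skips files that already contain the flag. Keep the backup outside `/etc/kubernetes/manifests`, since kubelet may treat every file in that directory as a static Pod manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "- --enable-aggregator-routing=true" right after the
# "- kube-apiserver" line of a kubeadm static Pod manifest, copying its indentation.
# Does nothing if the flag is already present, so it is safe to re-run.
add_aggregator_flag() {
  local manifest=$1 tmp
  grep -q -- '--enable-aggregator-routing' "$manifest" && return 0
  # Build the new content in /tmp (outside the manifests directory), then
  # overwrite the original in place so its permissions are kept.
  tmp=$(mktemp) || return 1
  awk '{ print }
       /^ *- kube-apiserver *$/ {
         match($0, /^ */)
         print substr($0, 1, RLENGTH) "- --enable-aggregator-routing=true"
       }' "$manifest" > "$tmp" && cat "$tmp" > "$manifest"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage on each master (backup kept outside /etc/kubernetes/manifests):
#   cp /etc/kubernetes/manifests/kube-apiserver.yaml /root/kube-apiserver.yaml.bak
#   add_aggregator_flag /etc/kubernetes/manifests/kube-apiserver.yaml
```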

$ vim /etc/kubernetes/manifests/kube-apiserver.yaml

...
spec:
  containers:
  - command:
    ...
    - --enable-aggregator-routing=true   # add this line

Deploy metrics-server

# Deploy metrics-server

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.4.1/components.yaml

# Modify the container configuration of the metrics-server Deployment

spec:
  containers:
  - args:
    - --cert-dir=/tmp
    - --secure-port=4443
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --kubelet-insecure-tls   # skip TLS verification of the kubelet certificates
    image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.4.1   # changed image
    imagePullPolicy: IfNotPresent
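In an offline environment, the image line usually has to point at a registry the nodes can reach. This is a sketch of a hypothetical `retarget_images` helper that swaps a literal image prefix. `registry.local:5000` in the usage is a placeholder. The assumption that the upstream manifest uses a `k8s.gcr.io/metrics-server/...` prefix should be checked against the downloaded file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: on every "image:" line whose value starts with the literal
# prefix $2, replace that prefix with $3. Quoted image values are left untouched.
retarget_images() {
  local manifest=$1 from=$2 to=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v from="$from" -v to="$to" '
    /^[ -]*image:/ {
      i = index($0, "image:")
      rest = substr($0, i + 6)      # text after "image:"
      sub(/^ */, "", rest)
      if (index(rest, from) == 1)   # literal prefix match, no regex escaping needed
        $0 = substr($0, 1, i + 5) " " to substr(rest, length(from) + 1)
    }
    { print }' "$manifest" > "$tmp" && cat "$tmp" > "$manifest"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Usage (registry.local:5000 is a placeholder for your own registry):
#   retarget_images components.yaml k8s.gcr.io/metrics-server registry.local:5000/google_containers
```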

# Deploy

$ kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

# Verify

$ kubectl get pods -A | grep metrics-server

kube-system metrics-server-5f69c95c86-sdpv9 1/1 Running 0 56s

$ kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

master1.k8s.com 333m 16% 1255Mi 67%

master2.k8s.com 268m 13% 1161Mi 62%

master3.k8s.com 226m 11% 1165Mi 62%

node1.k8s.com 154m 7% 909Mi 48%

Other

# kubectl command completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc
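Running the `echo ... >> ~/.bashrc` line again appends a duplicate entry each time. The hypothetical `append_once` helper below is a sketch that only adds a line when it is missing. The optional `k` alias lines follow the pattern given in the kubectl documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so the completion setup can be re-run safely.
append_once() {
  local line=$1 file=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage:
#   append_once 'source <(kubectl completion bash)' ~/.bashrc
#   # Optional short alias with completion:
#   append_once 'alias k=kubectl' ~/.bashrc
#   append_once 'complete -o default -F __start_kubectl k' ~/.bashrc
```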
